A Laravel + Statamic Performance Deep Dive

Your website is live, but is it fast? In today's digital landscape, performance isn't just a "nice-to-have"—it's a critical requirement for success. You have mere seconds to capture a visitor's attention, and a slow-loading page is the fastest way to lose it.

Forget abstract metrics and confusing jargon. This article is about one thing: raw speed. We'll walk through the practical steps we used to dramatically boost the performance of a Laravel + Statamic site, using a full-page static caching setup that delivers content almost instantly. Let's make your website faster.

Page Speed is the most important metric.

Before we dive into the how, let's talk about the why. Page speed isn't just a technical vanity metric; it has a direct and measurable impact on user behavior, engagement, and your bottom line.

Studies from Google, Amazon, and others consistently show that even small delays can have a massive negative effect. A slow site frustrates users, leading them to abandon your page (a "bounce") before it even finishes loading.

Here's a breakdown of the correlation:

- Bounce Rate: According to Google's research, as page load time goes from 1 second to 3 seconds, the probability of a user bouncing increases by 32%. As it goes from 1 second to 5 seconds, that probability increases by 90%.

- Conversion Rate: For e-commerce sites, delays can be devastating. Amazon found that every 100ms of latency cost them 1% in sales.

- User Satisfaction: A snappy, responsive website feels more professional and trustworthy. It leaves a positive impression, encouraging users to return.

Think of it like this:

| Page Load Time | User Satisfaction & Retention |
|----------------|-------------------------------|
| < 1.0 s | 😍 Retained (Smooth experience) |
| 1.0 s - 2.5 s | 😊 Mostly Retained (Acceptable) |
| 2.5 s - 4.0 s | 😐 At Risk (Noticeably slow) |
| > 4.0 s | 😠 Lost (Frustrating, likely to leave) |

In short, a faster website leads to happier users, higher engagement, and better outcomes for your goals.

Getting Started: Measure Current Performance

Don't optimize blindly; benchmark to identify bottlenecks!

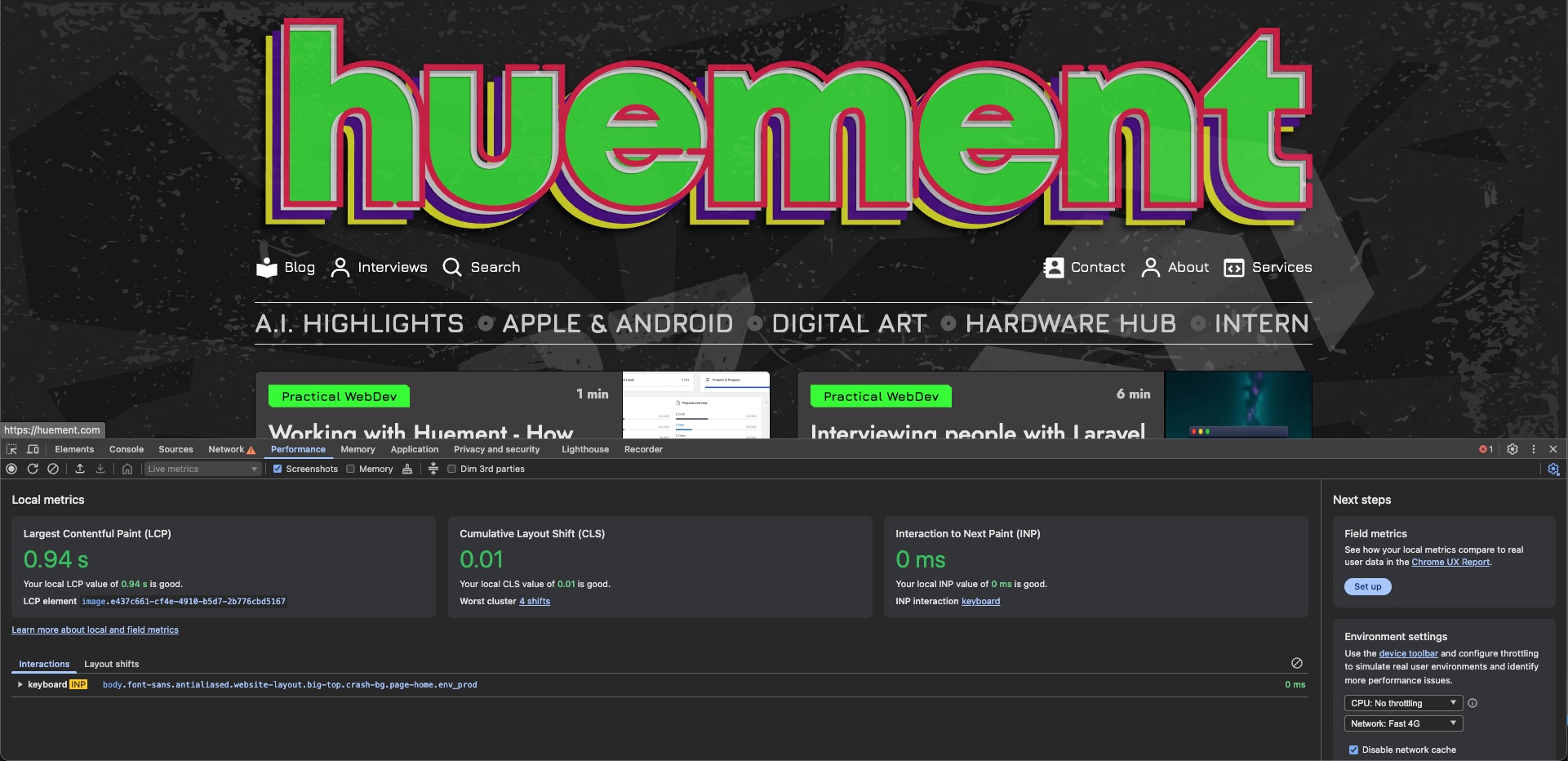

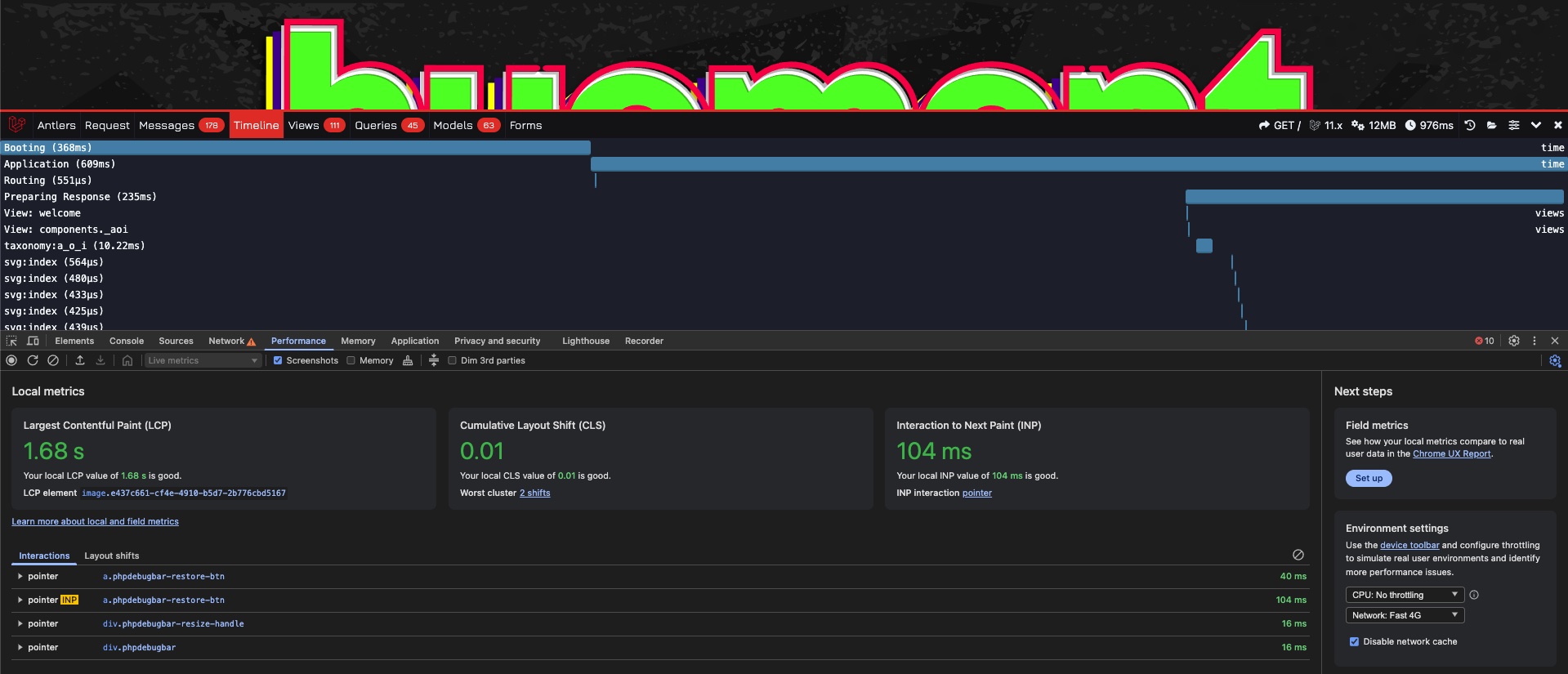

To measure our starting point, we need some tools. I'm using Google Chrome's Developer Tools (specifically the Lighthouse audit), but Firefox and Safari have similar features. Additionally, the Laravel Debugbar is invaluable for inspecting queries and view composition times, while htop on the server helps monitor resource usage.

Here's our initial, unoptimized score. It's not terrible, but there's a lot of room for improvement.

CAPTION: Our starting point. Plenty of work to do!

What to Look For:

- Server Response Time > 200ms? This could indicate unoptimized PHP code or slow database queries.

- High Query Count/Time? A clear database-related bottleneck. Look for N+1 problems or unindexed columns.

- Large Page Sizes? Your assets (images, CSS, JS) are too big. This points to a need for compression or a CDN.

- If scores are below 80, prioritize the top suggestions from the Lighthouse report, like compressing images or eliminating render-blocking resources.

We'll re-test after each major optimization to see its impact.
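If you'd like to track server response time from the terminal between Lighthouse runs, here is a minimal bash sketch built on curl's -w write-out variable %{time_starttransfer}, which reports the seconds until the first response byte arrived. The script name, the default of five runs, and the 200ms threshold (taken from the checklist above) are assumptions to adapt, not part of our production setup.

```bash
#!/usr/bin/env bash
# ttfb.sh (hypothetical name): time-to-first-byte for a URL over several runs.
# Assumption: 200 ms is the "slow server" threshold from the checklist above.
set -euo pipefail

THRESHOLD_MS=200

# Convert curl's seconds value (e.g. 0.184321) to whole milliseconds.
to_ms() {
  awk -v s="$1" 'BEGIN { printf "%d\n", s * 1000 }'
}

# Label a millisecond value as "ok" or "slow" against the threshold.
classify() {
  if [ "$1" -gt "$THRESHOLD_MS" ]; then
    echo "slow"
  else
    echo "ok"
  fi
}

# Request the URL N times (default 5) and print one line per run.
measure() {
  local url=$1 runs=${2:-5} secs ms i
  for ((i = 1; i <= runs; i++)); do
    # -o /dev/null discards the body; -s hides the progress meter.
    secs=$(curl -o /dev/null -s -w '%{time_starttransfer}' "$url")
    ms=$(to_ms "$secs")
    echo "run $i: ${ms}ms ($(classify "$ms"))"
  done
}

# Usage: ./ttfb.sh https://yoursite.com/blog 10
if [ "$#" -ge 1 ]; then
  measure "$@"
fi
```

Several runs matter: the first request after a cache clear is rendered by PHP, so it will usually be slower than the ones that follow.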

Our High-Performance Stack Explained

Before we get into the code, let's understand the system we're building. The goal is to create a full static page cache. This means that instead of running PHP and hitting the database for every single page visit, we'll serve a pre-built HTML file directly from the server's disk. Short of caching at a CDN edge, this is about as fast as a server can deliver a page.

Here are the key components and how they work together:

- Laravel Forge: Forge is a server provisioning and management tool that makes it easy to configure and deploy PHP applications. We use it to manage our server, configure NGINX, and, most importantly, run Daemons and Deployment Scripts. A daemon is a long-running background process; we use one to keep a queue worker processing jobs. Forge pricing starts at $12/month for the Hobby plan.

- Redis: Redis is an open-source, in-memory data store. While it can be used as a database or cache, we're using it here primarily as a highly efficient queue driver. When our deployment script needs to warm the cache, it doesn't do the work right away. Instead, it pushes a "job" for each page onto a Redis queue. This keeps our deployments fast, as the script finishes in seconds.

- Statamic: Our content management system. Statamic has a powerful built-in static caching engine. We'll configure it to generate a static HTML file for every page on our site and write it to a dedicated directory (public/static by default).

- NGINX: Our web server. We will configure NGINX to be "cache-aware." For every incoming request, NGINX will first check if a static HTML file exists for that URL. If it does, it serves it immediately without ever touching PHP. If it doesn't, it passes the request to PHP to be handled dynamically.

The workflow is simple and powerful:

- A developer pushes new code to the repository.

- Forge detects the push and runs the deployment script.

- The script clears old caches and queues up jobs in Redis to warm the new static cache.

- A daemon, managed by Forge, picks up these jobs and tells Statamic to generate the static HTML files.

- NGINX serves these lightning-fast static files to all incoming visitors.

The Implementation Details

Server Configuration (NGINX)

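A correctly configured NGINX file is the heart of our static cache. We need to tell it to use modern compression like Brotli, set long cache headers for assets, and, most importantly, use try_files to look for our static pages before hitting PHP. Note that Brotli is not built into stock NGINX: it requires the third-party ngx_brotli module, and its directives are not part of the excerpt below.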

This snippet from /etc/nginx/sites-available/yoursite.com shows the most critical parts.

```nginx
# Main routing with Statamic full static caching
location / {
    # Try static cache files first, including query strings
    try_files /static${uri}_$args.html /static${uri}.html /static${uri}/index.html $uri $uri/ /index.php?$query_string;
}

# Serve static cache files directly.
# This location is primarily for verification but also ensures correct headers.
location /static/ {
    alias /home/forge/yoursite.com/public/static/;
    expires 1y;
    add_header Cache-Control "public, immutable";
    add_header X-Content-Type-Options nosniff;
    access_log off;
}

# Static assets caching (long-lived) for CSS, JS, images, etc.
location ~* \.(?:ico|css|js|gif|jpe?g|png|woff2?|eot|ttf|svg|webp)$ {
    expires 1y;
    access_log off;
    add_header Cache-Control "public";
    try_files $uri $uri/ /index.php?$query_string;
}

# PHP-FPM for dynamic content (the fallback)
location ~ \.php$ {
    fastcgi_split_path_info ^(.+\.php)(/.+)$;
    fastcgi_pass unix:/var/run/php/php8.3-fpm.sock;
    fastcgi_index index.php;
    include fastcgi_params;
    fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
    # We disable NGINX's own fastcgi_cache because we are handling caching at the
    # application level with full static files, which is even faster.
    fastcgi_no_cache 1;
    fastcgi_cache_bypass 1;
}
```
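When a page seems slow, it helps to know whether NGINX would find a cached file for it at all. The bash sketch below approximates the three static-cache candidates from the try_files line in the config above, for a given document root, URI and query string, and prints the file NGINX would serve, or index.php when the request would fall through to PHP. It is a debugging aid under simplifying assumptions: the helper name is hypothetical, and it skips the $uri and $uri/ checks as well as NGINX's own URI normalization.

```bash
#!/usr/bin/env bash
# resolve-static.sh (hypothetical): approximates the static-cache lookups in
#   try_files /static${uri}_$args.html /static${uri}.html /static${uri}/index.html ...
# Simplification: ignores $uri, $uri/ and NGINX's URI normalization.
set -euo pipefail

resolve_static() {
  local root=$1 uri=$2 args=${3:-} candidate
  for candidate in \
    "$root/static${uri}_${args}.html" \
    "$root/static${uri}.html" \
    "$root/static${uri}/index.html"; do
    # try_files uses the first candidate that exists as a file.
    if [ -f "$candidate" ]; then
      echo "$candidate"
      return 0
    fi
  done
  # No cached file: NGINX would hand the request to PHP.
  echo "index.php"
}

# Usage: ./resolve-static.sh /home/forge/yoursite.com/public /blog "page=2"
if [ "$#" -ge 2 ]; then
  resolve_static "$@"
fi
```

For example, resolve_static /home/forge/yoursite.com/public /blog prints the path of the cached file if Statamic has written one (Statamic names them like blog_.html), or index.php if the page would still be rendered by PHP.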

What about Apache?

While Huement is running on NGINX, here is a configuration that should achieve a similar result for Apache in your .htaccess or virtual host file.

```apache
# Enable mod_rewrite
RewriteEngine On

# First, try to serve static cache files if they exist.
# Statamic's full strategy names files <path>_<query string>.html (e.g. static/blog_.html),
# matching the first try_files candidate in the NGINX config.
RewriteCond %{REQUEST_METHOD} GET
RewriteCond %{HTTP_COOKIE} !statamic_session
RewriteCond %{HTTP_COOKIE} !statamic_token
RewriteCond %{DOCUMENT_ROOT}/static%{REQUEST_URI}_%{QUERY_STRING}.html -s
RewriteRule ^ /static%{REQUEST_URI}_%{QUERY_STRING}.html [L,T=text/html]

RewriteCond %{REQUEST_METHOD} GET
RewriteCond %{QUERY_STRING} ^$
RewriteCond %{HTTP_COOKIE} !statamic_session
RewriteCond %{HTTP_COOKIE} !statamic_token
RewriteCond %{DOCUMENT_ROOT}/static%{REQUEST_URI}/index.html -f
RewriteRule ^(.*)$ /static%{REQUEST_URI}/index.html [L]

RewriteCond %{REQUEST_METHOD} GET
RewriteCond %{QUERY_STRING} ^$
RewriteCond %{HTTP_COOKIE} !statamic_session
RewriteCond %{HTTP_COOKIE} !statamic_token
RewriteCond %{DOCUMENT_ROOT}/static%{REQUEST_URI}.html -f
RewriteRule ^(.*)$ /static%{REQUEST_URI}.html [L]

# Normal Laravel front controller
RewriteCond %{REQUEST_FILENAME} !-d
RewriteCond %{REQUEST_FILENAME} !-f
RewriteRule ^ index.php [L]

# Set long cache headers for assets
<IfModule mod_expires.c>
    ExpiresActive On
    ExpiresByType image/jpeg "access plus 1 year"
    ExpiresByType image/png "access plus 1 year"
    ExpiresByType image/webp "access plus 1 year"
    ExpiresByType text/css "access plus 1 year"
    ExpiresByType application/javascript "access plus 1 year"
</IfModule>
```

Testing with cURL

curl is a fantastic tool for verifying that your server is behaving as expected. By checking the response headers, you can confirm if compression and caching are working.

```bash
# The -I flag gets headers, -L follows redirects, and --compressed requests compression
curl -I -L --compressed -H "Accept-Encoding: br" https://yoursite.com/blog
```

```text
HTTP/2 200
server: nginx/1.28.0
date: Sat, 23 Aug 2025 19:38:44 GMT
content-type: text/html; charset=utf-8
content-encoding: br
```

The content-encoding: br header confirms that Brotli compression is working!
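To repeat the curl check above after every deploy, a small bash sketch can read the headers and summarize them. The script and function names are hypothetical, and the second line of output is a heuristic assumption: NGINX normally sends a Last-Modified header when it serves a file straight from disk, while a typical Laravel response does not, so treat it as a hint rather than proof that the static cache was hit.

```bash
#!/usr/bin/env bash
# check-headers.sh (hypothetical): reads HTTP response headers on stdin and
# reports whether Brotli is active and whether the page looks like a static file.
set -euo pipefail

report_headers() {
  local headers
  headers=$(tr -d '\r')  # HTTP header lines end in CRLF; drop the CR
  if grep -qi '^content-encoding:[[:space:]]*br' <<<"$headers"; then
    echo "brotli: on"
  else
    echo "brotli: off"
  fi
  # Heuristic: NGINX adds Last-Modified for files it serves from disk.
  if grep -qi '^last-modified:' <<<"$headers"; then
    echo "static file: likely"
  else
    echo "static file: unlikely"
  fi
}

# Usage: ./check-headers.sh https://yoursite.com/blog
if [ "$#" -ge 1 ]; then
  curl -sI -L -H 'Accept-Encoding: br' "$1" | report_headers
fi
```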

Application Configuration (Laravel & Statamic)

Fix Closure Routes for Caching

Many tutorials show closure-based routes for simple pages. While convenient, they have historically had a major drawback: older Laravel releases cannot cache them with php artisan route:cache, a critical production optimization that speeds up route resolution. Recent releases can serialize closures, but controller methods remain the safer, more conventional choice. Convert closures to controller methods:

```php
use App\Http\Controllers\StaticPageController;
use Illuminate\Support\Facades\Route;

// BEFORE: a closure route (not cacheable on older Laravel releases).
Route::get('/community', function () {
    return view('community');
})->name('community');

// AFTER: a controller route, which can always be cached.
Route::get('/community', [StaticPageController::class, 'community'])->name('community');
```

After converting your routes, run php artisan route:cache to boost performance.

It takes only a few extra seconds to run php artisan make:controller StaticPageController, and it keeps your routes cacheable on any Laravel version.
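Before running php artisan route:cache, a quick grep can list any closure routes that remain. This is a rough bash sketch with a hypothetical script name: it assumes routes live in files such as routes/web.php and only catches closures (function () or arrow-function fn ()) that start on the same line as Route::get( and the other HTTP-verb helpers, so multi-line definitions may slip through.

```bash
#!/usr/bin/env bash
# find-closure-routes.sh (hypothetical): list route definitions that use a
# closure instead of a controller. Heuristic: the closure must start on the
# same line as Route::<verb>(.
set -euo pipefail

find_closure_routes() {
  # -n: line numbers, -H: file names, -E: extended regular expressions.
  # "|| true" keeps a "no matches" result from failing the script.
  grep -nHE 'Route::(get|post|put|patch|delete|options|any|match)\(.*[^[:alnum:]_](function|fn)[[:space:]]*\(' "$@" || true
}

# Usage: ./find-closure-routes.sh routes/*.php
if [ "$#" -ge 1 ]; then
  find_closure_routes "$@"
fi
```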

Configure Statamic's Static Cache

Getting Statamic's full-page cache working involves a few steps:

1. Set .env variables: Ensure your APP_URL is set correctly and define your caching strategy.

```ini
STATAMIC_STATIC_CACHING_STRATEGY=full
STATAMIC_STATIC_CACHING_LOCK_ENABLED=false
```

2. Configure config/statamic/static_caching.php: Define which URLs, templates, and content types should be cached.

- Use the Queue: Tell Statamic to use the queue for warming the cache so your deployments aren't held up.
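Because one wrong value in .env quietly turns the whole setup back into ordinary PHP rendering or synchronous cache warming, a pre-deploy check can save some head-scratching. This bash sketch reads a .env file and checks that APP_URL is set, that STATAMIC_STATIC_CACHING_STRATEGY is full, and that QUEUE_CONNECTION (Laravel's standard queue setting) is redis. The script name and the choice of keys are assumptions, and it only understands simple KEY=value lines.

```bash
#!/usr/bin/env bash
# check-env.sh (hypothetical): sanity-check the .env values this setup relies on.
# Assumption: simple KEY=value lines; the first matching line is used.
set -euo pipefail

# Print the value of KEY from an .env file, with double quotes stripped.
env_value() {
  local file=$1 key=$2
  { grep -m 1 -E "^${key}=" "$file" || true; } | cut -d= -f2- | tr -d '"'
}

# Compare KEY's value with the expected one; print OK/FAIL and return 1 on FAIL.
check_key() {
  local file=$1 key=$2 want=$3 got
  got=$(env_value "$file" "$key")
  if [ "$got" = "$want" ]; then
    echo "OK   ${key}=${got}"
  else
    echo "FAIL ${key} is '${got}', expected '${want}'"
    return 1
  fi
}

check_env() {
  local file=$1 status=0 url
  url=$(env_value "$file" APP_URL)
  if [ -n "$url" ]; then
    echo "OK   APP_URL=${url}"
  else
    echo "FAIL APP_URL is empty"
    status=1
  fi
  check_key "$file" STATAMIC_STATIC_CACHING_STRATEGY full || status=1
  check_key "$file" QUEUE_CONNECTION redis || status=1
  return "$status"
}

# Usage: ./check-env.sh /home/forge/yoursite.com/.env
if [ "$#" -ge 1 ]; then
  check_env "$1"
fi
```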

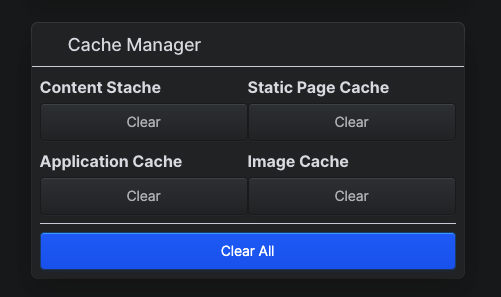

Statamic Cache Widget

While this doesn't directly speed up your site, having a handy widget for clearing both the Stache and the static cache is incredibly helpful! The one I'm using, shown in the screenshot, can be found here: https://statamic.com/addons/stoffelio/widget-cache-controller.

It allows you to quickly clear out your cache as you're making changes.

Statamic Static + Stache

There is actually quite a lot going on with Statamic caching, far too much for this article. A deeper, more thorough treatment of the topic is planned for a future article, so follow us on social media or subscribe to our RSS feed to get an alert when it comes out.

Custom Cacheable Middleware

Instead of caching everything via FastCGI, create a custom middleware to selectively cache pages. This is more flexible and ensures only appropriate pages are cached.

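```php
<?php

namespace App\Http\Middleware;

use Closure;
use Illuminate\Http\Request;
use Illuminate\Support\Facades\Cache;

class Cacheable
{
    public function handle(Request $request, Closure $next)
    {
        // Skip non-GET requests and logged-in users (statamic_session cookie).
        if (! $request->isMethod('GET') || $request->hasCookie('statamic_session')) {
            return $next($request);
        }

        $key = 'page_' . md5($request->fullUrl());

        // Serve the cached HTML when available.
        if (is_string($html = Cache::get($key))) {
            return response($html);
        }

        $response = $next($request);

        // Cache only the HTML of successful responses: caching the whole Response object
        // can fail to serialize (it may hold the View) and could replay per-visitor headers.
        if ($response->getStatusCode() === 200 && is_string($response->getContent())) {
            Cache::put($key, $response->getContent(), now()->addHour());
        }

        return $response;
    }
}
```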

Redis for Caching

Redis (which stands for REmote DIctionary Server) is an open-source, in-memory data store. The key phrase here is "in-memory," which means it stores data in your server's RAM instead of on a slower disk drive (like a traditional database such as MySQL). This makes accessing data from Redis incredibly fast, with simple reads often completing in well under a millisecond.

Think of it like this: A traditional database is like a filing cabinet in a separate room. To get information, your application has to walk to the room, find the right cabinet, open the drawer, and search for the file. Redis is like having the most important files spread out on your desk, right in front of you. Access is nearly instantaneous.

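```php
use Illuminate\Support\Facades\Cache;

// Illustrative key format (an assumption): one cache entry per author.
$detailsCacheKey = "author_details_{$authorId}";

// Return the cached value if present; otherwise run the query and keep it for 24 hours.
$authorDetails = Cache::remember(
    $detailsCacheKey,
    now()->addHours(24),
    function () use ($authorId) {
        return StatamicBlog::getAuthorDetails($authorId, 2);
    }
);
```

In short, anywhere you run an expensive or frequently repeated database query whose result rarely changes, cache it in Redis. Point Laravel's cache at Redis with CACHE_STORE=redis (Laravel 11 and later) or CACHE_DRIVER=redis (earlier versions), and make sure those keys expire or get cleared when the underlying content changes.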

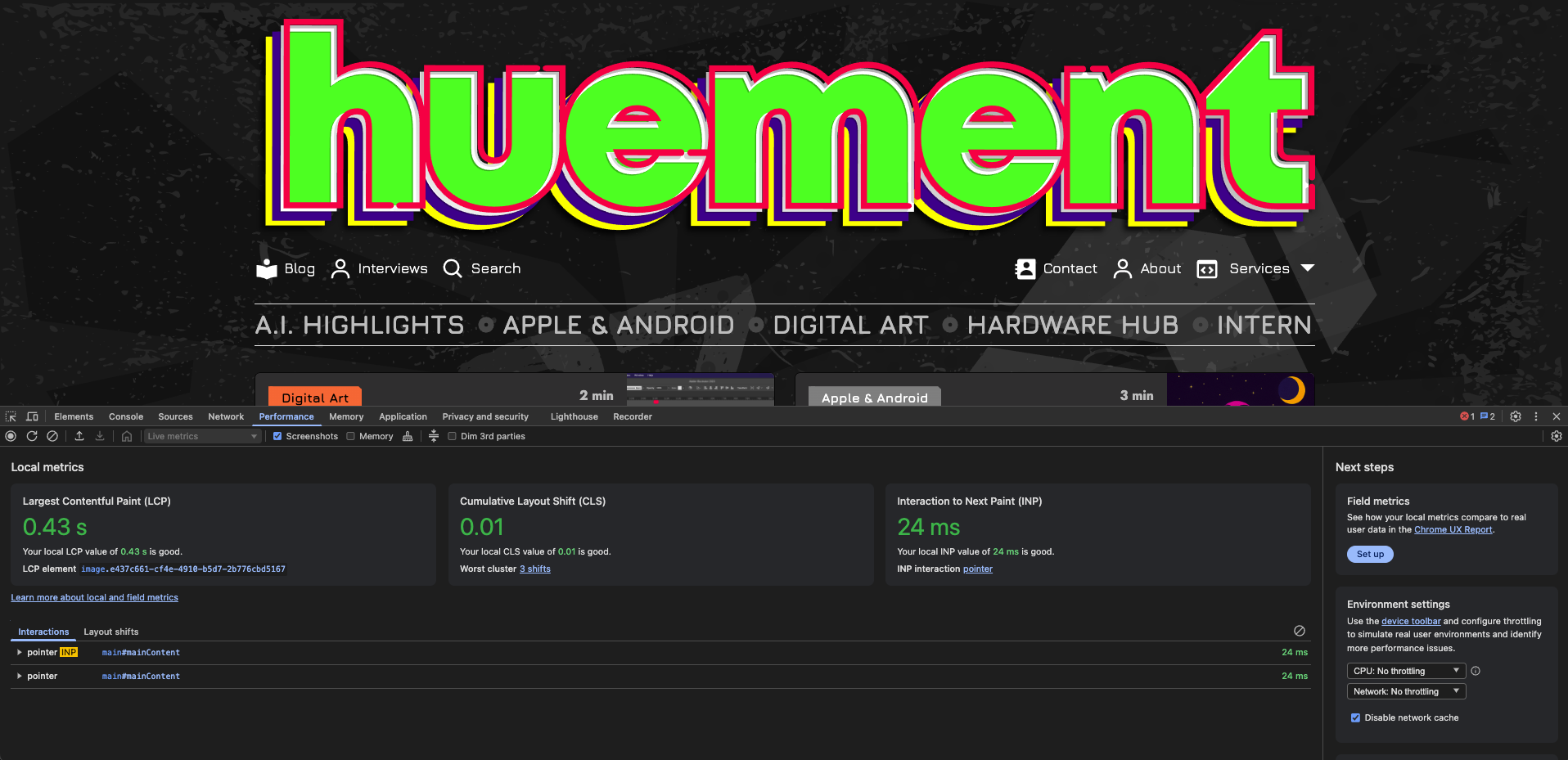

CAPTION: At this point you should be seeing LCP times under 1 second, since we are serving static HTML to our visitors.

Continuous Deployment: The Forge Deployment Script

This is where the magic happens. In your site's dashboard in Laravel Forge, you can edit the deployment script. With Quick Deploy enabled, this script runs every time you push a change to your deployment branch. Ours clears all the old caches, rebuilds the necessary ones, and then queues the static cache warming to run in the background.

Forge Deployment Log

This is what a successful deployment looks like. Notice how it queues 63 jobs and completes almost instantly. The actual work of generating the HTML files happens in the background without slowing down the deployment.

```text
⠒ Warming the Stache...
INFO You have poured oil over the Stache and polished it until it shines. It is warm and ready.
🌐 Queuing static cache warming...
Please wait. This may take a while if you have a lot of content.
Compiling URLs...
[✔] Entries
[✔] Taxonomies
[✔] Taxonomy terms
[✔] Custom routes
[✔] Additional
Adding 63 requests onto queue...
INFO All requests to warm the static cache have been added to the queue.
📋 Cache warming jobs have been queued and will run in the background.
✅ Deployment completed successfully!
```

WOOO BABY! YOU’RE A BEAST!!!

me talking to my editor after getting full page static caching working

The Full Deployment Script

Add this to your deployment script editor in Forge.

```bash
# Change directory into the project
cd "$FORGE_SITE_PATH"

# Pull the latest code (part of Forge's default deployment script)
git pull origin "$FORGE_SITE_BRANCH"

# Install composer dependencies
$FORGE_COMPOSER install --no-interaction --prefer-dist --optimize-autoloader

# Run database migrations
$FORGE_PHP artisan migrate --force

echo "🧹 Clearing all caches..."
$FORGE_PHP artisan cache:clear
$FORGE_PHP artisan config:clear
$FORGE_PHP artisan route:clear
$FORGE_PHP artisan view:clear
$FORGE_PHP artisan statamic:stache:clear
$FORGE_PHP artisan statamic:static:clear

echo "⚡ Re-caching configurations..."
$FORGE_PHP artisan config:cache
$FORGE_PHP artisan route:cache
$FORGE_PHP artisan view:cache

echo "🔥 Warming Statamic's Stache cache..."
$FORGE_PHP artisan statamic:stache:warm

# Ask running queue workers to restart so the daemon picks up the new code
$FORGE_PHP artisan queue:restart

echo "🌐 Queuing static cache warming..."
# Queue the static cache warming to run in the background.
# This makes deployments super fast!
$FORGE_PHP artisan statamic:static:warm --queue

echo "📋 Cache warming jobs have been queued."
echo "The daemon will process them in the background."
```

Production Setup: How It All Works Together

The optimized setup combines NGINX, Laravel, Statamic, Redis, and Forge into a high-performance system. Here’s how each component contributes:

- Static Cache (Statamic): Pre-rendered HTML files in public/static/ are served directly by NGINX, bypassing PHP and database queries for near-instant page loads.

- Queue-Based Cache Warming (Redis + Forge): After each deployment, the deployment script queues cache-warming jobs in Redis and a Forge-managed daemon works through them, so static files are generated without delaying deployments.

- Fast Deployments (Forge): The deployment script clears old caches, re-caches configurations, and triggers background cache warming for seamless updates.

- Monitoring (Forge): A check-queue.sh helper script and Forge's dashboard provide visibility into queue status and server health.
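The article doesn't show check-queue.sh itself, so here is a hypothetical sketch of what such a script might contain. Laravel's Redis queue driver keeps waiting jobs in a Redis list named queues:<name> and jobs a worker has picked up in a sorted set named queues:<name>:reserved, both behind your Redis key prefix. The default prefix below, laravel_database_, is an assumption based on Laravel's default config for an app named "Laravel"; confirm your real key with redis-cli --scan --pattern '*queues:*' before relying on it.

```bash
#!/usr/bin/env bash
# check-queue.sh (hypothetical sketch): report progress of queued cache-warming jobs.
# Assumptions: Laravel's Redis queue driver and a key prefix of "laravel_database_"
# (override with QUEUE_PREFIX to match your REDIS_PREFIX).
set -euo pipefail

QUEUE_PREFIX=${QUEUE_PREFIX:-laravel_database_}

# Turn pending / in-progress counts into a one-line status message.
summarize() {
  local pending=$1 reserved=$2
  if [ "$pending" -eq 0 ] && [ "$reserved" -eq 0 ]; then
    echo "idle: no jobs waiting"
  else
    echo "busy: ${pending} pending, ${reserved} in progress"
  fi
}

main() {
  local key="${QUEUE_PREFIX}queues:$1" pending reserved
  pending=$(redis-cli LLEN "$key")               # jobs waiting in the list
  reserved=$(redis-cli ZCARD "${key}:reserved")  # jobs a worker has picked up
  summarize "$pending" "$reserved"
}

# Usage: ./check-queue.sh default
if [ "$#" -ge 1 ]; then
  main "$1"
fi
```

Running it right after a deploy should show the warming jobs draining; once it reports idle, the queued pages should have been regenerated.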

Performance Results

Before: First load times of 25+ seconds, subsequent loads ~0.5s due to unoptimized routes and assets.

After: Instant page loads (<200ms) for all pages, thanks to static caching and optimized NGINX.

That's what we call progressive performance improvement: progress, not perfection.

The Final Result

After implementing this entire stack, the performance improvement is staggering.

Before: ~1-2 second server response times, with Lighthouse scores in the 70s.

After: ~10-20 millisecond server response times. Pages load instantly for all users.

The cache automatically rebuilds after each deployment, so once the queued warming jobs finish, every visitor gets a pre-built static page. This is exactly how a high-performance website should work.

Huement.com is now running at production-level performance! 🚀


Getting this set up is a huge win! 🎉
